Here, instead, I wish to summarize the other poster I presented at the same venue, which concerned the combination of the most sensitive search channels, the sensitivity of CMS with a given amount of data, and the loss of significance reach, or discovery power, entailed by running the LHC at a lower-than-design beam energy. But I will do this only as a way of introducing a more interesting discussion, as you will see below.

The Poster

Below is a thumbnail (well, a reduced version anyway) of the poster discussed in this article. For a full-sized powerpoint version, click on it.

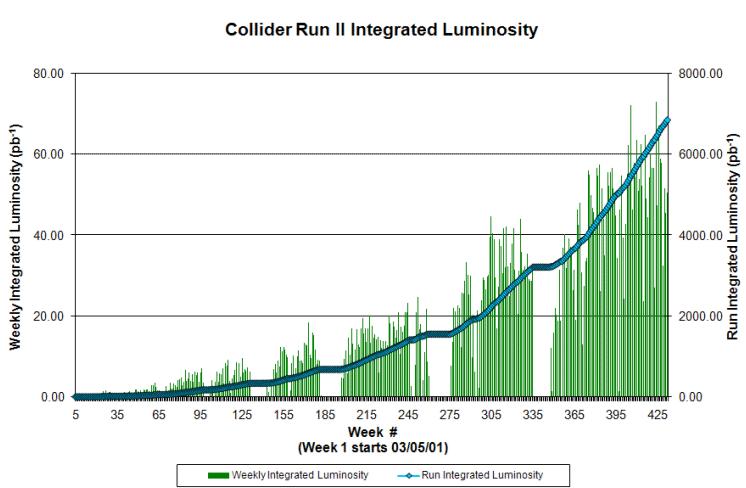

The Standard Model Higgs boson is presently being sought only at the Tevatron collider, which is collecting data at a formidable pace. The figure on the right shows the integrated luminosity delivered by the machine as a function of time (the curve joining blue diamonds), as well as the integrated luminosity delivered per week (the narrow vertical bars, whose scale is on the left vertical axis). The machine is clearly performing very well: about 60 inverse picobarns of data are added every week to the over 6,000 already delivered to each of the two experiments.

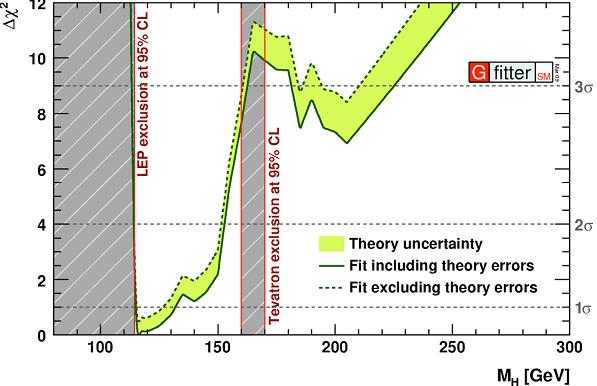

So far, the Tevatron search has excluded at 95% confidence level (that is, with at most a one-in-twenty chance of such an exclusion occurring if a Higgs boson of that mass existed) the Higgs boson mass range between 160 and 170 GeV. Together with previous inputs, this creates a suggestive convergence of indirect information and direct limits, which seems to force us to place our chips on the region between 115 and 150 GeV as the most probable hiding spot of the phantom particle. The best summary of that convergence is provided by the Gfitter results shown on the left: there, as a function of the boson's mass (on the horizontal axis), direct and indirect constraints are merged into a combined Delta-chisquared curve. The further the curve departs from zero, the less likely a given Higgs mass is.
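The Delta-chisquared reading can be made quantitative. What follows is a minimal sketch, assuming the usual Gaussian approximation for a single fitted parameter (the Higgs mass): a Delta-chisquared of z squared corresponds to a z-sigma deviation, so the 95% exclusion threshold sits at a Delta-chisquared of about 3.84. The function name `delta_chi2_to_cl` is hypothetical and purely illustrative; it is not part of the Gfitter software.

```python
import math

def delta_chi2_to_cl(delta_chi2: float) -> float:
    """Confidence level at which a mass value with the given Delta-chi^2
    is disfavoured, for ONE fitted parameter (Gaussian approximation).

    With one degree of freedom, Delta-chi^2 = z^2, where z is the
    significance in standard deviations, so CL = erf(z / sqrt(2)).
    """
    z = math.sqrt(delta_chi2)
    return math.erf(z / math.sqrt(2.0))

# Landmark values: 1 sigma, the 95% CL threshold, and 3 sigma.
for d in (1.0, 3.84, 9.0):
    print(f"Delta-chi2 = {d:5.2f} -> disfavoured at {100 * delta_chi2_to_cl(d):.1f}% CL")
```

In this approximation a Delta-chisquared of 1 corresponds to about 68% CL and one of 9 to about 99.7% CL, which is why the curve is usually read against these horizontal lines.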

In February 2009 a meeting was held in Chamonix to assess the physics potential, as a function of delivered luminosity, of an LHC run at reduced beam energy, and to discuss in that light the best options for the LHC startup. Results on Higgs searches were presented there by CMS for two scenarios: running at 14 or at 10 TeV center-of-mass energy, with one inverse femtobarn of collected proton collisions.

As the collision energy goes down, the production rates of different physical processes are affected non-trivially. All of them decrease, but processes that require high-energy gluons in the initial state of the collision, such as Higgs production via gluon fusion, are hit harder than, for instance, those initiated by the annihilation of quark-antiquark pairs. This is because gluons tend to carry a smaller fraction of the proton's momentum, so the probability of finding ones energetic enough to create a high-mass particle depends more critically on the proton beam energy.
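The kinematics behind this argument can be sketched in a few lines. To produce a particle of mass M at rest (zero rapidity), each of the two colliding partons must carry a momentum fraction x = M/sqrt(s) of its proton. The sketch below only computes this kinematic fraction for a 150 GeV Higgs; it does not evaluate parton densities, which would require a real PDF set, and the helper name `typical_x` is hypothetical.

```python
def typical_x(mass_gev: float, sqrt_s_tev: float) -> float:
    """Momentum fraction each parton must carry to produce a particle of
    mass M at rest in the lab frame: x1 = x2 = M / sqrt(s)."""
    return mass_gev / (sqrt_s_tev * 1000.0)  # convert TeV to GeV

M_HIGGS = 150.0  # GeV, the benchmark mass used later in this article

for energy in (14.0, 10.0, 7.0):
    print(f"sqrt(s) = {energy:4.1f} TeV -> x = {typical_x(M_HIGGS, energy):.4f}")
```

The required fraction grows from about 0.011 at 14 TeV to about 0.015 at 10 TeV and 0.021 at 7 TeV; following the argument above, it is the gluon-initiated processes that suffer most from having to reach these larger momentum fractions.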

The CMS experiment used the predictions of its well-oiled searches for the H->WW and H->ZZ decay signatures to produce a combination: how much of the Higgs boson mass range could be excluded? And what significance would a signal show, if it were there? The results were computed with two different approximate statistical methods, mirroring the present practice at the Tevatron, where both a pseudo-frequentist CLs method and a Bayesian calculation are used to extract Higgs boson mass constraints. The two methods were shown to agree within 10%, a satisfactory check. As shown in the figure on the right, one inverse femtobarn of data at 14 TeV is enough for a 2-sigma exclusion of the mass range between 140 and 230 GeV, while at a beam energy of 10 TeV the region likely to be excluded in the absence of a Higgs boson shrinks sizably. It was estimated that lowering the energy from 14 to 10 TeV reduces the sensitivity of the search so much that about twice as much data is needed at the lower energy to obtain the same results as in the 14 TeV scenario.
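For readers unfamiliar with the CLs prescription, here is a minimal sketch for the simplest possible case: a single-bin counting experiment with known expected signal s and background b, and no systematic uncertainties. CLs is the ratio CL_{s+b}/CL_b, where CL_{s+b} is the probability of observing as few events as were seen if signal plus background is present, and CL_b is the same probability under background alone; a signal is excluded at 95% CL when CLs falls below 0.05. This illustrates the method only; it is not the approximate multi-channel CMS procedure, and the function names and event counts are hypothetical.

```python
import math

def poisson_cdf(n: int, mu: float) -> float:
    """P(N <= n) for a Poisson variable with mean mu."""
    term = math.exp(-mu)
    total = term
    for k in range(1, n + 1):
        term *= mu / k  # P(k) = P(k-1) * mu / k
        total += term
    return total

def cls(n_obs: int, s: float, b: float) -> float:
    """CLs = CL_{s+b} / CL_b, using the event count as test statistic:
    fewer observed events means less compatibility with a signal."""
    cl_sb = poisson_cdf(n_obs, s + b)  # tail probability under signal + background
    cl_b = poisson_cdf(n_obs, b)       # same tail under background only
    return cl_sb / cl_b

def excluded_at_95(n_obs: int, s: float, b: float) -> bool:
    return cls(n_obs, s, b) < 0.05

# Hypothetical example: 10 background events expected, 10 observed.
for s in (5.0, 10.0):
    print(f"s = {s:4.1f}: CLs = {cls(10, s, 10.0):.3f}, excluded: {excluded_at_95(10, s, 10.0)}")
```

With these made-up numbers a signal of 10 expected events is excluded (CLs of about 0.02) while one of 5 is not (CLs of about 0.2). Dividing by CL_b is the design choice that prevents excluding a signal the experiment is insensitive to merely because the background fluctuated down.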

The studies also showed that a discovery of the Higgs at over 5-sigma significance in the 1/fb, 14 TeV scenario is possible for CMS alone with the H->WW decay, but only if the Higgs mass lies in the range that the Tevatron has already excluded. Significant results may still be obtained, however, if the Higgs boson lies outside that region but not too far from it. The figure on the right shows how the significance of a Higgs signal in the WW final state depends on the Higgs mass, for 14 TeV running and one inverse femtobarn of analyzed data.

A critical scenario

As the best-informed among you know, a revised schedule for the startup of the Large Hadron Collider at CERN was released a few weeks ago. The decision has been taken to start proton collisions at the reduced beam energy of 3.5 TeV, thus producing 7 TeV collisions at the core of the detectors.

The current schedule is probably still liable to last-minute changes, especially as far as 2010 is concerned: after five months of 7 TeV collisions, the beam energy may be cranked up to produce 10 TeV collisions for the remainder of the run. In such a quickly changing situation, accurately evaluating the LHC discovery prospects for the Higgs boson might seem a somewhat vacuous exercise: the unknown physical parameter of interest, the Higgs mass, is joined by other unpredictable parameters, namely man-made decisions. The latter are notoriously much harder to model!

I wish to take a different stance here: I believe it is actually quite important to try to find out whether the current schedule, if carried out in 2010, would put the LHC experiments ahead of the Tevatron experiments in the race for a Higgs discovery. For that purpose, I will make a back-of-the-envelope estimate for a critical scenario.

Imagine the Higgs boson has a mass of 150 GeV, and imagine that in September 2010 the CERN management has to decide whether to switch to heavy-ion running (as is now planned), forcing the Higgs search into a long pit stop at a time when the Tevatron starts to see some hint of a signal, and when CMS and ATLAS, analyzing the data collected until then, also see something vaguely exciting at the same mass.

The decision would be quite a tough one to take, possibly tougher than the one by which CERN shut down the LEP II collider eight years ago, leaving a claimed 3-sigma evidence for a 115 GeV Higgs hanging in the air (a number which, however, later deflated to 1.7 sigma, casting a suspicion of handcrafting on the earlier estimate). While the LEP II shutdown did not appear to put the Higgs boson at risk of leaving CERN, stopping LHC proton collisions in the scenario considered above would mean handing the Tevatron the jus primae noctis with the Higgs boson on a silver platter. That is because fixing the many electrical connections still in need of servicing, the warm-up and cool-down procedures, and the other unavoidable delays that the LHC would inflict on itself would give the Tevatron another full year of advantage, at a time when the American machine would probably be running at record luminosities, with its two blood-thirsty experiments understandably eager to add a sparkling new jewel to those already adorning the crown of their twenty-year history.

So, let us first evaluate what signal the CDF and DZERO experiments might see in August 2010. I predict that by then the Tevatron will have delivered about 8.5 inverse femtobarns of collisions, and that the experiments will have results based on data collected until early winter 2010, i.e. probably 7/fb each.
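As a sanity check on the 8.5/fb figure, one can extrapolate linearly from the numbers quoted earlier: just over 6,000 inverse picobarns delivered so far and about 60 added per week. This is a minimal sketch assuming a constant weekly rate, which is a simplification (shutdowns and luminosity improvements both change the real rate); the helper name `weeks_to_reach` is hypothetical.

```python
def weeks_to_reach(target_pb: float, current_pb: float, rate_pb_per_week: float) -> float:
    """Weeks needed to go from current_pb to target_pb of integrated
    luminosity, assuming a constant delivered rate per week."""
    if rate_pb_per_week <= 0:
        raise ValueError("rate must be positive")
    return max(0.0, target_pb - current_pb) / rate_pb_per_week

# Figures quoted in this article: ~6,000/pb delivered, ~60/pb per week,
# 8.5/fb (8,500/pb) projected.
weeks = weeks_to_reach(target_pb=8500.0, current_pb=6000.0, rate_pb_per_week=60.0)
print(f"about {weeks:.0f} weeks of running")
```

The answer, roughly 42 weeks or about ten months of running at the quoted pace, shows that the projection requires no dramatic improvement in Tevatron performance.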

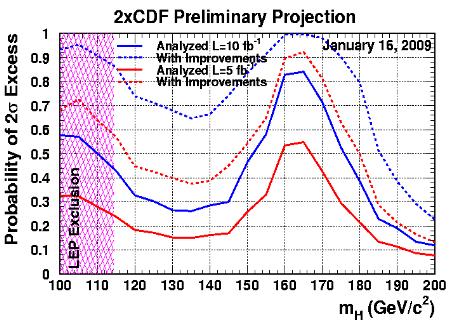

If a Higgs of 150 GeV is what Nature chose for our Universe, by combining their 7/fb datasets CDF and DZERO would have a 30% to 65% chance to observe an excess at the 2-sigma level, as shown by the figure on the right (7 is almost halfway between the 5/fb red curves and the 10/fb blue curves). Bear in mind that this is just an eyeballing estimate of the most likely outcome of the experimental searches: 3-sigma would be less likely, but possible if a positive fluctuation of the data occurred; 1-sigma would similarly be possible.
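The link between an expected sensitivity and the odds of seeing a given excess can be sketched under a simplifying assumption (mine, not taken from the Tevatron projections): that the observed significance fluctuates around its expected median value with a unit-width Gaussian distribution. The function names below are hypothetical.

```python
import math

def norm_cdf(x: float) -> float:
    """Cumulative distribution function of a standard Gaussian."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))

def prob_excess_at_least(threshold: float, expected_z: float) -> float:
    """Probability that the observed significance reaches `threshold`,
    assuming it is Gaussian-distributed around expected_z with unit width."""
    return 1.0 - norm_cdf(threshold - expected_z)

for z_exp in (1.5, 2.0, 2.4):
    print(f"expected {z_exp:.1f} sigma -> P(>= 2 sigma) = {prob_excess_at_least(2.0, z_exp):.2f}")
```

In this approximation, a 30% to 65% chance of a 2-sigma excess corresponds to an expected median significance of roughly 1.5 to 2.4 sigma, and the same function shows why a 3-sigma outcome is less likely but not excluded.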

And what would CMS see then? First of all, we need to estimate the integrated luminosity the LHC will deliver in the course of the first 10 months of running. From the LHC commissioning web page one infers that a total of about 40/pb will be collected at 7 TeV in the first few months, followed by about 270/pb at 8 or 10 TeV; let us make it 10 TeV for the sake of argument.

We can then neglect the first data taken at 7 TeV, which would add very little and would thus likely be left out of the first analyses. We also need to scale down the integrated delivered luminosity by a factor of roughly 0.8: at start-up it is not just the accelerator that requires tuning, but the detectors as well, and the fraction of time during which they manage to take good data will necessarily be smaller than 100%.
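The bookkeeping in the last two paragraphs can be written down explicitly. This is a minimal sketch using the article's own inputs (40/pb at 7 TeV, 270/pb at 10 TeV, a live fraction of about 0.8); the function name `usable_luminosity` is hypothetical.

```python
def usable_luminosity(delivered_pb: dict, analyzed_energies: set, live_fraction: float) -> float:
    """Sum the delivered luminosity over the beam energies the analyses use,
    then scale by the fraction of time the detector takes good data."""
    total = sum(lumi for energy, lumi in delivered_pb.items() if energy in analyzed_energies)
    return total * live_fraction

# Delivered luminosity in pb^-1, keyed by center-of-mass energy in TeV.
delivered = {7.0: 40.0, 10.0: 270.0}
usable = usable_luminosity(delivered, analyzed_energies={10.0}, live_fraction=0.8)
print(f"{usable:.0f}/pb of analyzable 10 TeV data per experiment")
```

The result, 216/pb, is what gets rounded to "about 200/pb" below.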

All in all, we are looking at about 200/pb of good, analyzable data per experiment at 10 TeV. By eyeballing the results presented in the poster shown above, one can guesstimate that with that much (or that little) data CMS will have a chance of reaching a 1 to 1.5 standard deviation excess by combining its WW and ZZ search channels. A combination of CMS and ATLAS should then have a fighting chance of reaching 2 standard deviations.
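The step from one experiment to the CMS plus ATLAS combination follows a standard rule of thumb: for independent searches with roughly Gaussian behaviour, expected significances add in quadrature. This is a minimal sketch, assuming ATLAS reaches about the same sensitivity as CMS (an assumption, not a result quoted in the poster); the function name is hypothetical.

```python
import math

def combine_independent(*significances: float) -> float:
    """Approximate combined significance of independent, uncorrelated
    searches: add the individual significances in quadrature."""
    return math.sqrt(sum(z * z for z in significances))

for z_cms in (1.0, 1.5):
    z_atlas = z_cms  # hypothetical: ATLAS assumed as sensitive as CMS
    print(f"CMS {z_cms:.1f} + ATLAS {z_atlas:.1f} -> {combine_independent(z_cms, z_atlas):.2f} sigma")
```

Two experiments at 1 to 1.5 sigma each combine to roughly 1.4 to 2.1 sigma, hence the "fighting chance" of reaching 2 standard deviations. The same function applies to combining the WW and ZZ channels within one experiment, provided they are statistically independent.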

With two LHC experiments showing first hints of a Higgs at 150 GeV, and two Tevatron experiments showing a similar signal, the CERN management could decide to stop running proton beams through the ring only with a quite heavy heart, and by overruling a good part of the four thousand physicists in CMS and ATLAS: the chance that the Tevatron would then capitalize on the additional year of advantage granted by an LHC shutdown, and with some luck become the first laboratory to claim strong evidence for the Higgs, would be quite concrete.

Conversely, continuing the run would likely see the rate of LHC data collection grow, as the optimal working points of the machine's beam parameters become better and better understood. One more year of data at 10 TeV would delay the repairs needed to go up in energy, but would allow the collection of perhaps 1.5 to 2 inverse femtobarns of data. Such a roughly eight-fold increase in statistics over the 200/pb of late 2010 would then be just enough to make an observation-level result a likely outcome for the combined CMS and ATLAS searches.
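The "just enough" claim can be checked with the usual statistics-dominated scaling: when signal and background both grow linearly with integrated luminosity L, the expected significance grows as the square root of L. This is a minimal sketch that ignores systematic uncertainties (which make it optimistic) and takes the 2-sigma, 200/pb combined estimate above as its reference point; the function name is hypothetical.

```python
import math

def scaled_significance(z_ref: float, lumi_ref: float, lumi_new: float) -> float:
    """Expected significance at lumi_new, scaling a reference value as
    sqrt(L); valid when statistical uncertainties dominate."""
    return z_ref * math.sqrt(lumi_new / lumi_ref)

Z_LATE_2010 = 2.0     # combined CMS+ATLAS estimate from the text
LUMI_LATE_2010 = 200.0  # pb^-1 per experiment

for lumi_pb in (1500.0, 2000.0):
    z = scaled_significance(Z_LATE_2010, LUMI_LATE_2010, lumi_pb)
    print(f"{lumi_pb / 1000:.1f}/fb -> about {z:.1f} sigma")
```

This gives roughly 5.5 to 6.3 sigma, consistent with an observation-level result. The same rule connects to the Chamonix numbers: needing twice as much data at 10 TeV is equivalent to a significance lower by a factor of about 1/sqrt(2), or 0.71, at equal luminosity.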

If we look back at 2000, we realize that the decision to shut down the LEP II collider was imposed on the CERN management by the need to avoid further delays in the schedule of the LHC, a machine that promised, and still promises, to revolutionize our understanding of subatomic physics. No such constraint is present in the scenario considered above, so I would guess that the second of the two alternatives I described would be chosen: the LHC would keep running.

If you ask what I think about the whole matter, however, you may be in for a surprise. I do not believe that it makes any scientific difference whether one laboratory or its competitor wins the race to a big discovery: what matters most to me is that the discovery is made. This, and the fact that I sign papers from both CDF and CMS, makes me a perfectly seraphic observer of this intriguing race between Fermilab and the LHC!

Conclusions

The LHC schedule has changed so often over the last five years or so that it would be quite naive to attach much meaning to very definite scenarios for the discovery of the Higgs boson. Nevertheless, the competition between the Tevatron and the LHC for that particular goal is overt, so it is not too idle an occupation to discuss the possible situations in which the High-Energy Physics community might find itself next year.

By itself, the Tevatron will never manage to produce a true observation-level significance for a Standard Model Higgs boson with standard properties: the limitation is due to the small signal-to-noise ratio of its search channels. Three-sigma evidence, however, is within reach. The symbolic value of a first evidence in the United States would be great, and it would be perceived by the media and the general public as a significant failure of the CERN endeavour.

It thus appears quite possible that what drives the future LHC schedule will not be another beam incident, but rather the quality of the first data from the LHC experiments. I am tempted to conclude that the next few years will be quite interesting for HEP, but this is a rather empty statement, a bit like saying that Supersymmetry is just around the corner: as Veltman notes, it has been hiding there for quite a long time now.

Disclaimer of liability

I do disclaim thee, oh Liability!

Although the above poster is official CMS material, the interpretation discussed in this article is entirely based on my personal views, which in no way represent those of the CMS collaboration. The same applies to the CDF and DZERO predictions.
